Patrick's 데이터 세상

Sklearn Classifier 본문

반응형

SMALL

Sklearn에서 제공하는 Classifier 모듈을 활용하여 분류 모델을 만듭니다.

Task는 긍정, 중립, 부정 3개로 분류하는 감성 분류 모델입니다.

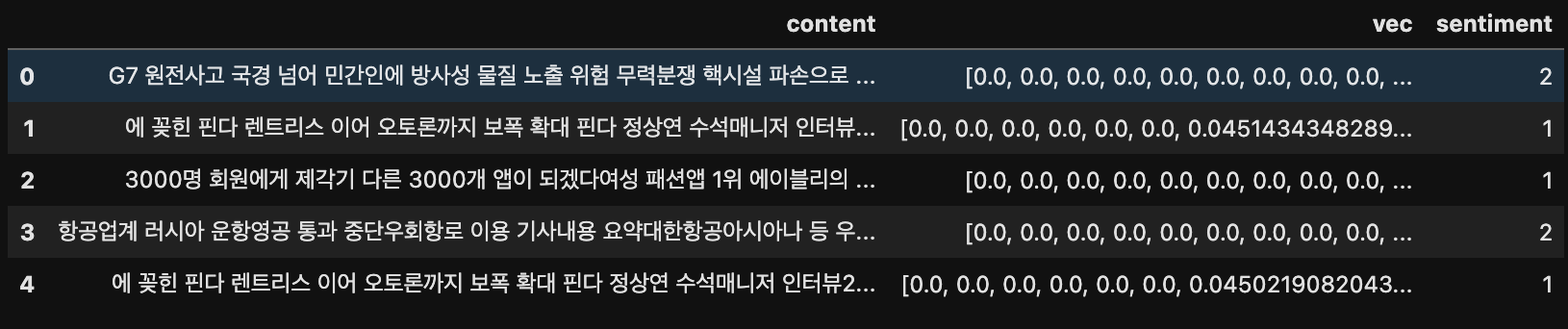

👉🏻 Data Load & Check

vectorize는 content 데이터로 TF-IDF vectorizing 한 'vec'을 활용하였습니다.

df.head()

👉🏻 Train Test Split

from sklearn.model_selection import train_test_split

train_data, test_data = train_test_split(df, test_size=0.2, random_state=42)

train_data = train_data.reset_index()

test_data = test_data.reset_index()

print(len(df))

print(len(train_data))

print(len(test_data))

👉🏻 Classfier

Sklearn 제공 분류 모델을 모두 활용하여 비교합니다.

# kNN

from sklearn.neighbors import KNeighborsClassifier

# Decision Tree

from sklearn.tree import DecisionTreeClassifier

# Random Forest

from sklearn.ensemble import RandomForestClassifier

# Naive Bayes

from sklearn.naive_bayes import GaussianNB

# SVM, Support Vector Machines

from sklearn.svm import SVCfrom sklearn.model_selection import KFold, cross_val_score

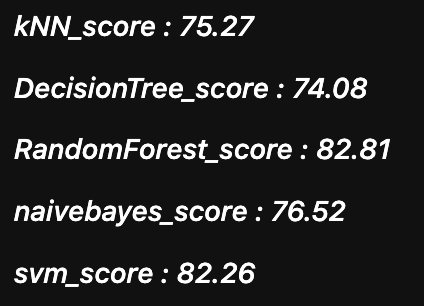

Score

kfold, cross_val_score로 훈련 테스트를 바꿔가며 train하는 교차검증으로 정확도를 비교합니다.

scoring = 'accuracy'

k_fold = KFold(shuffle = True, random_state=0)# knn

knn_clf = KNeighborsClassifier()

knn_clf = knn_clf.fit(train_vec, train_label)

knn_score = cross_val_score(knn_clf, train_vec, train_label, cv=k_fold, n_jobs=1, scoring=scoring)

# decision tree

dt_clf = DecisionTreeClassifier()

dt_clf = dt_clf.fit(train_vec, train_label)

dt_score = cross_val_score(dt_clf, train_vec, train_label, cv=k_fold, n_jobs=1, scoring=scoring)

# random forest

rf_clf = RandomForestClassifier()

rf_clf = rf_clf.fit(train_vec, train_label)

rf_score = cross_val_score(rf_clf, train_vec, train_label, cv=k_fold, n_jobs=1, scoring=scoring)

# naive bayes

nb_clf = GaussianNB()

nb_clf = nb_clf.fit(train_vec, train_label)

nb_score = cross_val_score(nb_clf, train_vec, train_label, cv=k_fold, n_jobs=1, scoring=scoring)

# svm

svm_clf = SVC()

svm_clf = svm_clf.fit(train_vec, train_label)

svm_score = cross_val_score(svm_clf, train_vec, train_label, cv=k_fold, n_jobs=1, scoring=scoring)print(f"kNN_score : {round(np.mean(knn_score) * 100, 2)}")

print(f"DecisionTree_score : {round(np.mean(dt_score) * 100, 2)}")

print(f"RandomForest_score : {round(np.mean(rf_score) * 100, 2)}")

print(f"naivebayes_score : {round(np.mean(nb_score) * 100, 2)}")

print(f"svm_score : {round(np.mean(svm_score) * 100, 2)}")

RandomForest model이 score가 가장 높으므로 채택.

👉🏻 RandomForest

from sklearn.ensemble import RandomForestClassifier

rf_clf = RandomForestClassifier()

rf_clf.fit(train_vec, train_label)

# labeling

test_pred = rf_clf.predict(test_vec)

test_data['label'] = test_predtest_data.head()

metrics

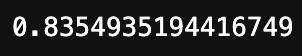

평가지표로 f1 score 채택.

from sklearn.metrics import f1_score

f1_score(test_data.sentiment, test_pred, average='micro')

👉🏻 GridSearchCV

GridSearch를 통해 좀 더 최적의 하이퍼파라미터를 튜닝해보겠습니다.

from sklearn.metrics import classification_report, f1_score, accuracy_score

from sklearn.model_selection import GridSearchCVparams = { 'n_estimators' : [100, 200],

'max_depth' : [6, 8, 10, 12],

'min_samples_leaf' : [8, 12, 18],

'min_samples_split' : [8, 16, 20]

}

# RandomForestClassifier 객체 생성 후 GridSearchCV 수행

rf_clf = RandomForestClassifier(random_state = 0, n_jobs = -1)

grid_cv = GridSearchCV(rf_clf,

param_grid = params,

cv = 3,

n_jobs = -1)

grid_cv.fit(train_vec, train_label)

print('최적 하이퍼 파라미터: ', grid_cv.best_params_)

print('최고 예측 정확도: {:.4f}'.format(grid_cv.best_score_))

최적의 하이퍼 파라미터로 재학습

rf_clf = RandomForestClassifier(n_estimators = 200, # 결정 트리 개수

max_depth = 12, # 트리의 최대 깊이

min_samples_leaf = 8, # 리프노드가 되기 위해 필요한 최소한의 샘플 데이터수

min_samples_split = 8, # 노드를 분할하기 위한 최소한의 샘플 데이터수(과적합을 제어하는데 사용)

random_state = 0,

n_jobs = -1)rf_clf.fit(train_vec, train_label)# labeling

test_pred = rf_clf.predict(test_vec)

test_data['label'] = test_pred

test_data.head()

f1_score(test_data.sentiment, test_pred, average='micro')

반응형

LIST

'Machine Learning > Classifier' 카테고리의 다른 글

| RandomForest (0) | 2022.04.07 |

|---|